Case Study

Resolving Data Quality Issues for Successful BI Projects

In a recent Data Integrity Trends & Insights report, data analysts revealed that 70% of professionals who struggle to trust their data cite data quality issues as the primary reason impacting their projects. Accordingly, BI project failures are increasingly linked to poor data quality.

In today’s data- and AI-driven industrial landscape, Business Intelligence (BI) is essential for informed decision-making and efficient resource management.

The success of any BI project relies on the quality of its data, which in turn depends on the complexity and scale of the data workloads and on the systems that manage them. Data quality issues can derail projects, leading to wasted time, money, and resources. How can organizations tackle common data challenges and ensure BI project success?

Here, we examine common data quality issues in business intelligence and their effects on BI projects, and offer practical solutions for maintaining high data quality.

Understanding Common Data Quality Issues in Business Intelligence

Why Do BI Projects Fail Due to Data Quality?

Data quality issues typically stem from a lack of proper data governance and insufficient data cleansing. Common issues include inaccurate data, incomplete records, and inconsistencies across data sources. The quality of data used in daily operations is crucial to a business's credibility and performance. When data isn't managed or validated correctly, the resulting discrepancies cause BI projects to fail.

For example, in the retail industry, incorrect inventory data can lead to poor forecasting, resulting in lost sales or overstocking. Similarly, in healthcare, inaccurate patient data can snowball into faulty insights, affecting patient care and hospital management. When these issues are compounded in a BI project, they create bottlenecks that not only drain resources but also erode trust, derailing the very purpose of data-driven decision-making.

7 Common Data Quality Issues Impacting BI Projects

Inconsistent data from various sources, incomplete records, fragmented datasets, and a lack of real-time validation all contribute to inaccurate insights and flawed decision-making. Addressing these common pitfalls is crucial for organizations seeking to maximize the benefits of BI implementation.

Across industries, several common data quality issues often surface. Here are the most common data quality challenges affecting Business Intelligence.

Data Quality Challenges in Business Intelligence: Lessons from Top Industries

Many corporations hold vast amounts of data, but it is how they manage it, through data cleansing, validation, and governance, that drives BI project success. To avoid BI project failures, businesses must prioritize data quality from the start, using modern assessment tools and automation to ensure their data is accurate and actionable. Let's turn to a few case studies that highlight the importance of data quality for ensuring BI success.

• Walmart’s Data Quality Struggles in Demand Forecasting

In 2022, retail giant Walmart experienced notable disruptions in its BI processes due to inaccurate inventory data. Despite its extensive technological infrastructure, Walmart faced difficulties maintaining real-time inventory accuracy across multiple warehouses and retail outlets. The issue arose from outdated data quality metrics: lagging data entries and inconsistent data validation processes led to poor demand forecasting. As a result, Walmart struggled with both overstocking and understocking in key categories, impacting sales margins and customer satisfaction. This costly oversight revealed how crucial data governance and data cleansing are for large retailers reliant on BI systems for inventory management.

The core problem was fragmented data across several distribution centers, leading to delayed insights. Implementing better quality assessment and automated data validation processes would have mitigated these issues, ensuring data accuracy for real-time decisions in demand forecasting.
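As an illustration of the kind of automated check described above, here is a minimal sketch of a freshness rule that flags lagging inventory entries. It is not Walmart's actual pipeline: the record layout, the `stale_records` helper, and the 24-hour limit are all assumptions made for the example.

```python
from datetime import datetime, timedelta


def stale_records(records, now, max_age):
    """Return the records whose last update is older than max_age."""
    return [r for r in records if now - r["updated_at"] > max_age]


# Hypothetical inventory snapshot from two warehouses.
now = datetime(2024, 1, 1, 12, 0)
inventory = [
    {"sku": "A-100", "warehouse": "W1", "updated_at": datetime(2024, 1, 1, 11, 30)},
    {"sku": "A-100", "warehouse": "W2", "updated_at": datetime(2023, 12, 30, 9, 0)},
]

# Assumed freshness limit: anything not updated in the last 24 hours is stale.
stale = stale_records(inventory, now, timedelta(hours=24))
for record in stale:
    print(f"Stale entry: {record['sku']} at {record['warehouse']}")
```

Running a check like this before each forecasting run would surface lagging entries per warehouse, so they can be refreshed or excluded rather than silently feeding the forecast.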

• UnitedHealth Group’s Challenges with Fragmented Patient Data

UnitedHealth Group, a leading healthcare provider, faced significant challenges prior to implementing standardized BI strategies in 2023, primarily due to fragmented patient data. The organization had accumulated vast amounts of information from various sources, including hospitals, clinics, and third-party providers. Without a unified data governance framework, this data was often inconsistent, incomplete, or outdated. The lack of standardized data cleansing and validation processes resulted in inaccurate diagnoses and treatment reports, directly impacting patient care. Investing in BI strategies was crucial for addressing these issues and improving data quality across the board.

Following its BI investments in 2023, UnitedHealth Group achieved impressive growth, with earnings from operations reaching $32.4 billion, a 13.8% increase from the previous year. This surge was attributed to the company’s successful standardization of advanced BI strategies, which drove improved decision-making across the organization.

The lesson here is that a robust data governance model, integrated with automated data quality assessment tools, is essential to ensure consistency and accuracy in healthcare BI projects.

Where to Address Data Quality Issues

To address data quality issues impacting BI performance, there are three critical stages at which you can intervene and eliminate common BI project bottlenecks:

1. At the Source: Fix issues at data entry. This is the most effective approach, but it also requires more intervention and process changes.

2. During ETL: If fixing at the source isn’t possible, resolve issues during the ETL process by applying smart algorithms and rules to clean the data.

3. At the Metadata Layer: Apply rules and logic within a metadata layer to fix issues during the query stage, without modifying the underlying data.
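The third stage is the least intuitive of the three, so a small sketch may help. It uses a SQL view, via Python's built-in `sqlite3` module, as a stand-in for a metadata layer: the cleaning rules live in the view and the raw table is never modified. The table, view, and column names are hypothetical, and real BI semantic layers express such rules in their own ways.

```python
import sqlite3


def build_demo_connection() -> sqlite3.Connection:
    """Create an in-memory database with a raw table and a cleaning view."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE raw_orders (order_id INTEGER, country TEXT, amount TEXT)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?, ?)",
        [
            (1, " us ", "19.99"),  # stray whitespace, lowercase code
            (2, "US", ""),         # empty amount
            (3, "Us", "5"),        # mixed-case code
        ],
    )
    # The view plays the role of the metadata layer: rules are applied
    # at query time and the raw rows stay exactly as they were loaded.
    conn.execute(
        """
        CREATE VIEW clean_orders AS
        SELECT
            order_id,
            UPPER(TRIM(country)) AS country,
            CAST(NULLIF(TRIM(amount), '') AS REAL) AS amount
        FROM raw_orders
        """
    )
    return conn


conn = build_demo_connection()
clean_rows = conn.execute(
    "SELECT order_id, country, amount FROM clean_orders ORDER BY order_id"
).fetchall()
raw_rows = conn.execute(
    "SELECT country FROM raw_orders ORDER BY order_id"
).fetchall()
print(clean_rows)
```

Reports that query `clean_orders` see consistent country codes and a proper missing value for the empty amount, while `raw_orders` keeps the original values for auditing. The trade-off is that the rules run on every query, which is why fixing at the source or during ETL is preferable when it is possible.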

Best Practices for Resolving Data Quality Problems in BI

Mastering data quality is the key to unlocking flawless BI performance. Here is how to tackle common issues at every stage, from the source to real-time governance.

1. Metadata Management: Define common data formats and rules for consistent data quality across domains.

2. Profile Your Data: Regularly profile your data to measure integrity and ensure it adheres to set rules.

3. Create Data Quality Dashboards: Build dashboards to monitor key data quality metrics and trends in real time.

4. Set Quality Alerts: Set alerts for data quality thresholds, so issues can be addressed promptly.

5. Maintain an Issues Log: Keep a log of data quality issues to track patterns and apply preventative measures.

6. Implement Data Governance: Develop effective data governance strategies for BI success with clear roles, policies, and KPIs for ongoing data quality management.
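Practices 2, 4, and 5 above (profiling, alerts, and an issues log) fit together naturally, and the following minimal sketch shows one way they might be wired up in plain Python. The metric names, thresholds, and the `QualityIssue` record are assumptions chosen for illustration, not a prescribed standard.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class QualityIssue:
    """One entry in the data quality issues log."""
    logged_on: date
    metric: str
    value: float
    threshold: float


def completeness(records, field):
    """Share of records with a non-empty value for the field."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def uniqueness(records, field):
    """Share of distinct values among the non-empty values of the field."""
    values = [r.get(field) for r in records if r.get(field) not in (None, "")]
    if not values:
        return 0.0
    return len(set(values)) / len(values)


# Assumed rules: metric name -> (measure, field, minimum acceptable value).
CHECKS = {
    "email_completeness": (completeness, "email", 0.90),
    "customer_id_uniqueness": (uniqueness, "customer_id", 1.00),
}


def run_profile(records, issues_log, today):
    """Compute every metric and log an issue for each one below its threshold."""
    metrics = {}
    for name, (measure, field, minimum) in CHECKS.items():
        value = measure(records, field)
        metrics[name] = value
        if value < minimum:
            issues_log.append(QualityIssue(today, name, value, minimum))
    return metrics


records = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": ""},
    {"customer_id": 2, "email": "b@example.com"},
    {"customer_id": 3, "email": "c@example.com"},
]
issues_log = []
metrics = run_profile(records, issues_log, date(2024, 1, 15))
for issue in issues_log:
    print(f"ALERT {issue.metric}: {issue.value:.2f} < {issue.threshold:.2f}")
```

The `metrics` dictionary is what a dashboard would plot over time, and the accumulated `issues_log` is what a team would review to spot recurring patterns.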

Leveraging BI Data Quality Tools for Project Success

To achieve BI project success, organizations must tackle data quality issues head-on, leveraging technology to uphold a robust data governance framework for ongoing improvement. Here are some actionable solutions and technologies that help prevent BI project failures:

1. Data Governance: Using platforms like Collibra or Informatica Data Governance, businesses can establish clear data governance frameworks to ensure consistent and accurate data management. These tools help automate policy enforcement, ensuring that data is uniformly entered, stored, and processed across departments.

2. Data Cleansing: Solutions like Trifacta and Talend Data Quality are industry-standard tools for data cleansing. These tools automate the process of removing duplicates, correcting inaccuracies, and keeping data up to date. Incorporating these into the BI pipeline ensures clean, high-quality data throughout the project lifecycle.

3. Data Quality Assessment Tools: Tools like IBM InfoSphere and Ataccama provide robust data quality assessment capabilities. They monitor and flag data quality issues early, offering insights through data quality metrics like accuracy and completeness, allowing teams to address errors before they escalate.

4. Automated Data Validation: With solutions like Talend Data Fabric or DataRobot, businesses can use data quality automation to continuously validate incoming data in real time. These tools ensure that only accurate, consistent, and validated data enters the BI system, minimizing the risk of poor-quality data affecting project outcomes.

A business’s technology investment is crucial for sustaining high data quality over time. Tools like Talend and Informatica automatically validate data against predefined quality metrics, offering continuous feedback and alerts for discrepancies. By combining current data quality tools, businesses can prevent BI project failures, improve operational efficiency, and gain valuable insights from reliable data.
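To make "validating data against predefined rules" concrete, here is a tool-agnostic sketch in plain Python. It does not use the API of Talend, Informatica, or any other product named above. The field names and rules are hypothetical; the point is only that each incoming record is checked before it reaches the BI system, and failures are kept with their reasons.

```python
from datetime import date

# Assumed rules: field name -> predicate that a valid value must satisfy.
RULES = {
    "sku": lambda v: isinstance(v, str) and v.strip() != "",
    "quantity": lambda v: isinstance(v, int) and not isinstance(v, bool) and v >= 0,
    "updated_on": lambda v: isinstance(v, date),
}


def validate(record):
    """Return the names of fields that are missing or fail their rule."""
    return [
        field
        for field, rule in RULES.items()
        if field not in record or not rule(record[field])
    ]


def split_batch(batch):
    """Separate a batch into accepted records and (record, failures) pairs."""
    accepted, rejected = [], []
    for record in batch:
        failures = validate(record)
        if failures:
            rejected.append((record, failures))
        else:
            accepted.append(record)
    return accepted, rejected


batch = [
    {"sku": "A-100", "quantity": 12, "updated_on": date(2024, 3, 1)},
    {"sku": "", "quantity": 4, "updated_on": date(2024, 3, 1)},
    {"sku": "B-200", "quantity": -3},
]
accepted, rejected = split_batch(batch)
for record, failures in rejected:
    print(f"Rejected {record.get('sku')!r}: failed {failures}")
```

Only the `accepted` records would be loaded. The `rejected` list, with the failing fields attached, can feed the alerts and continuous feedback described above.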

Assuring Data Quality for Your BI Projects

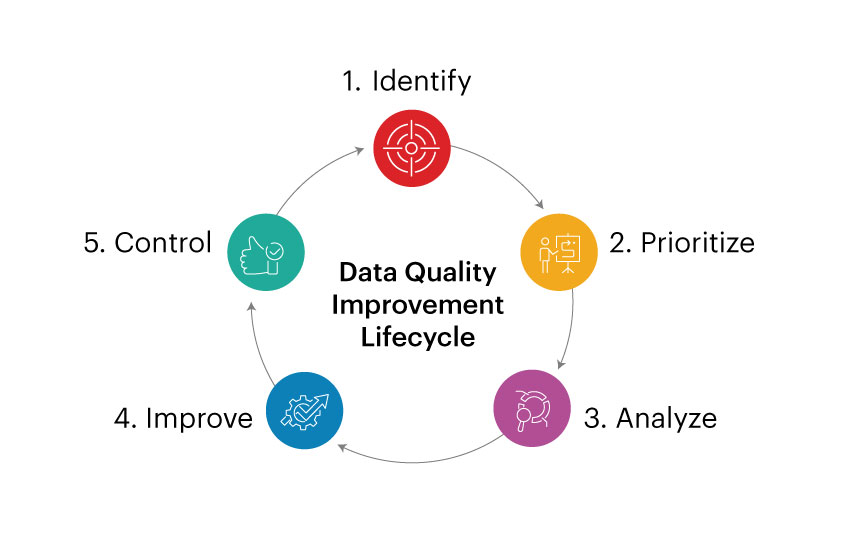

At Intelliswift, we know that effective data management is the foundation of successful BI projects. We support businesses throughout the data quality improvement lifecycle by providing comprehensive tools and expertise for data profiling, cleansing, and validation, ensuring accurate and reliable data. Our continuous monitoring and governance solutions enable businesses to maintain high data quality standards over time.

Our approach combines advanced technology with industry expertise to ensure data integrity at every stage of the BI process. By leveraging the latest and best-in-class tech stacks for quality assurance and continuous monitoring, we help businesses overcome data quality challenges and unlock the full potential of their BI initiatives.

Ready to take your BI projects to the next level? Create your tailored data governance framework with Intelliswift and manage your data with complete confidence.